The latest PiP evaluation "strand report" has recently been published (WP7:39 Evaluation of impact on business processes). This strand of the PiP evaluation entailed an analysis of the business process techniques used by PiP, their efficacy, and the impact of process changes on the curriculum approval process, as instantiated by C-CAP. More generally, this strand of evaluation was a contribution towards a wider understanding of technology-supported process improvement initiatives within curriculum approval and their potential to render such processes more transparent, efficient and effective. The evaluation yielded positive data on C-CAP's impact on the business process. C-CAP resolved, either completely or partially, the recognised process failings identified in the PiP baselining exercise. C-CAP also demonstrated potential for improving process cycle time, process reliability, process visibility, process automation and process parallelism, and for reducing transition delays, thus contributing to considerable process efficiencies. Of course, many other interesting discoveries were made. But this blog post is the first of two in which I would like to take the opportunity to highlight some of the more curious discoveries and intriguing observations to be made about this strand of evaluation.

The innovation and development work undertaken by PiP is intended to explore and develop new technology-supported approaches to curriculum design, approval and review. A significant component of this innovation is the use of business process analysis and process change techniques to improve the efficacy of curriculum approval processes. Improvements to approval process responsiveness and overall process efficacy can assist institutions in better reviewing or updating curriculum designs to enhance pedagogy. Improvements to process also assume a greater significance in a globalised HE environment, in which institutions must adapt or create curricula quickly in order to better reflect rapidly changing academic contexts, and to respond better to the demands of employment marketplaces and the expectations of professional bodies. Of course, this is increasingly an issue for disciplines within the sciences and engineering, where new skills or knowledge need to be rapidly embedded in curricula as a response to emerging technological or environmental developments. To make matters more difficult still, all of the aforementioned must be achieved while simultaneously maintaining high standards of academic quality, thus adding a further layer of complexity to the way in which HE institutions engage in “responsive curriculum design” and approval.

Partly owing to limitations in the data required to facilitate comparative analyses, this evaluation adopted a mixed approach, making use of a series of qualitative and quantitative methods as well as theoretical techniques. Together these approaches enabled a comparative evaluation of the curriculum approval process under the “new state” (i.e. using C-CAP) and under the “previous state”. The strand report summarises the methodology used to enable comparative evaluation and presents an analysis and discussion of the results; suffice it to say that the evaluation approach used qualitative benchmarking and quantitative Pareto analysis to understand process improvements.

Qualitative benchmarking refers to the "comparison of processes or practices, instead of numerical outputs" and is a recognised general management approach, enjoying wide use in IT, knowledge management (KM), and, of course, within business and industrial process contexts. In essence, qualitative benchmarking necessitates the comparison of a previous situation or "state" with a current situation or "new state", or against established frameworks that define a state of "good practice". Data for qualitative benchmarking is generally gathered using interview approaches. Using a similar iterative interview approach, the PiP baselining exercise identified five key process and document workflow issues, therefore sufficiently characterising the critical aspects of the previous state (i.e. the current curriculum approval process). Data on this previous state was then used in the qualitative benchmarking process, using the process under C-CAP as the "new state".

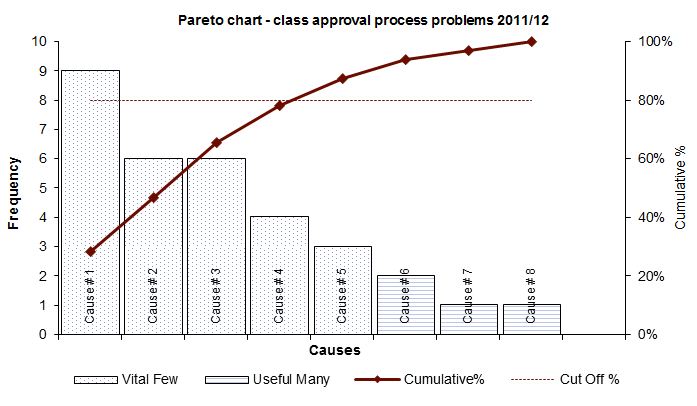

Pareto analysis is recognised as an important root-cause evaluation technique. The Pareto principle enjoys wide application across a disparate range of disciplines and states that for many events approximately 80% of the observed effects come from 20% of the causes. The purpose of Pareto analysis and charting is to identify the most important factors (within a large set of factors) requiring attention, thus enabling problems to be prioritised and monitored (e.g. most common sources of defects/errors, the highest occurring type of defect/error, etc.). (See the example Pareto chart from the strand report.) Data pertaining to the curriculum approval process in the Faculty of Humanities and Social Sciences (HaSS) during 2011/2012 was gathered*. Whilst such data is no substitute for genuine baselining data, its purpose in this instance was, via Pareto analysis, to identify significant problems within the current curriculum approval process and to use this problem data to assist in assessing the potential impact of C-CAP on approval processes.
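For readers unfamiliar with the mechanics, the calculation behind a Pareto chart can be sketched in a few lines of Python. This is only an illustrative sketch and not the procedure used in the strand report: the function names `pareto_table` and `vital_few`, the 80% threshold default, and the cause labels and counts are all hypothetical and do not come from the HaSS data.

```python
from typing import Dict, List, Tuple


def pareto_table(counts: Dict[str, int]) -> List[Tuple[str, int, float]]:
    """Return (cause, count, cumulative %) rows, largest cause first."""
    total = sum(counts.values())
    if total == 0:
        return []
    rows = []
    running = 0
    # Sort causes by how often they occurred, most frequent first.
    for cause, n in sorted(counts.items(), key=lambda kv: kv[1], reverse=True):
        running += n
        rows.append((cause, n, 100.0 * running / total))
    return rows


def vital_few(counts: Dict[str, int], threshold: float = 80.0) -> List[str]:
    """Return the smallest leading set of causes whose cumulative share
    of all occurrences reaches the threshold (assumed here to be 80%)."""
    selected = []
    for cause, _, cumulative in pareto_table(counts):
        selected.append(cause)
        if cumulative >= threshold:
            break
    return selected


# Hypothetical tallies of reasons for delay or rejection (illustrative only).
example_counts = {
    "cause A": 42,
    "cause B": 31,
    "cause C": 15,
    "cause D": 8,
    "cause E": 4,
}

for cause, n, cumulative in pareto_table(example_counts):
    print(f"{cause}: {n} occurrences, {cumulative:.0f}% cumulative")

print(vital_few(example_counts))
```

With these made-up numbers, the first three causes account for 88% of occurrences, so they are the ones a Pareto chart would flag for attention first.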

The supposition when entering this evaluative strand was that the use of multiple evaluation approaches would assist in triangulation - and in any normal evaluation this would probably be the case; but what was found via qualitative benchmarking and Pareto analysis was quite different. In brief, the process and document management failings identified in the baselining exercise and considered to be the principal curriculum approval process impediments were not reflected in the Pareto analysis. Instead the Pareto analysis - which was based on objective measurements - exposed an entirely different series of process issues (or "causes", e.g. causes that lead to the delay or rejection of curricula). To be sure, some overlap in the results could be found; but overall these were two disconnected datasets.

But were they really disconnected? No. Instead, the disjunction appears to have enriched the evaluation because it provided two differing perspectives on the same phenomenon: a philosophical perspective and a material perspective. It appears that the two exercises examined curriculum approval processes from different standpoints, the baselining exercise from a qualitative (philosophical) perspective and the Pareto analysis from a quantitative (material) perspective, and in so doing identified different issues within the same process.

The perceived process issues - as identified by respondents in the baselining exercise - focused on the tacit, holistic, strategic and/or fundamental process issues (this could be considered our philosophical perspective), whilst the Pareto analysis - based on data gathered from the coalface of Academic Quality at HaSS - exposed important day-to-day issues which would otherwise evade treatment in any holistic or qualitative discussion of the approval process (our material perspective). In many ways it could be said that this philosophical-material scenario is cognate with the content of popular television programmes such as Supersize vs. Superskinny, whose participants offer philosophical reasons for their obesity/anorexia but are surprised at the material results of a food consumption diary. The objective results do not necessarily dismiss the philosophical explanations, as there are numerous underlying psychological factors influencing eating disorders, many of which are corroborated by the participants' supervising GP; but they clarify those explanations and provide an additional perspective rooted in objectivity. The same is true of the curriculum approval process (and process change evaluation more generally), where mixing qualitative and quantitative data sources is considered essential to better understand process issues and to "give meaning" to numeric data, and where process issues appear differently depending on whether you are a senior academic engaged in the philosophical aspects of the approval process or whether you are at the material coalface of academic quality. Mixing evaluation techniques is the only way of exposing this disparate data and understanding the true extent of process change, which is neither reducible to numbers alone nor bereft of any objective underpinning.

*Thanks go to Bryan Hall (HaSS, Academic Quality Team) for kindly gathering this data.