A recent posting on the PiP blog outlined the use of heuristic evaluation techniques to optimise PiP’s Class and Course Approval Pilot (C-CAP) system prior to further evaluation. Heuristic compliance was considered imperative for two reasons: minimising users' extraneous cognitive load during user acceptance testing, and optimising the user acceptance testing data. A report summarising the principal findings of the user acceptance testing has recently been published on the PiP website, but I promised in my last post to make some further comments about the testing itself.

The most recent phase of the C-CAP evaluation was broadly termed "user acceptance testing"; however, the remit of this phase was far wider and was concerned with:

- Assessing the extent to which C-CAP functionality met users' expectations within specific curriculum design tasks

- Measuring the overall usability of C-CAP (e.g. whether the interface design and functionality were instinctive, navigable, etc.) and capturing data on users' preferred system design/features

- Evaluating the performance of C-CAP in supporting curriculum design tasks and the approval process, as well as its potential for improving pedagogy

- Eliciting data on current approval processes and how C-CAP could contribute to improvements in the process

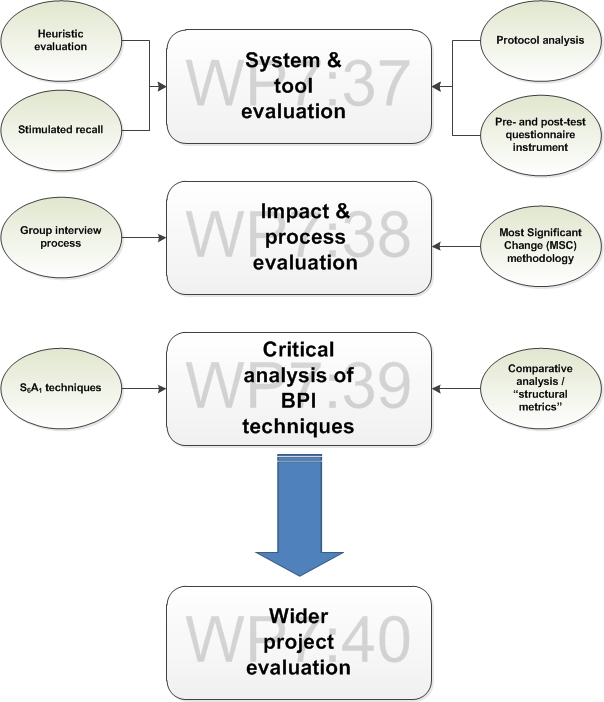

This phase of evaluation therefore focussed on a small but nevertheless important aspect of the overall PiP evaluation plan (see the sub-phase diagram). It was also the most data-intensive strand of the PiP evaluation plan. Piloting of C-CAP within faculties will form the basis of the next evaluative strand (WP7:38 – Impact & process evaluation), in which rich qualitative data is expected to be gathered via group interviews and Most Significant Change stories; but even that strand will not generate the same volume of data as the user acceptance testing.

The evaluation combined Human-Computer Interaction (HCI) techniques with specially designed data collection instruments, including protocol analysis, stimulated recall and pre- and post-session questionnaires. It is impossible to summarise all the data and findings in this brief blog post; many interesting discoveries were made and I encourage readers to consult the associated report. Instead I want to take this opportunity to reflect on one of the report's principal findings: the dichotomy that exists between the system (which received generally positive feedback) and the overall curriculum design process (which was less well received).

Overall, the C-CAP system was well received by study participants. For example, using quantitative measures such as Brooke's System Usability Scale (SUS) and Bangor et al.'s Adjective Rating Scale (ARS) we found that C-CAP achieved a mean SUS score of > 73 (M = 73.5; SD = 16.12; IQR = 16.25). This SUS score increased when outlying data were removed. An associated ARS rating of 5 ("Good") placed C-CAP within the 3rd quartile of Bangor et al.'s system acceptability ranges. All of this points to a system that should be considered "promising" and one that, in commercial parlance, is "ready to go to market". Although some system-based issues were identified using protocol analysis and stimulated recall data, the overall picture that emerged from the qualitative data was largely positive.
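For readers unfamiliar with how SUS figures like those above are derived, the sketch below applies Brooke's standard scoring rule: odd-numbered items contribute (response − 1), even-numbered items contribute (5 − response), and the total is multiplied by 2.5 to give a score out of 100. The function names and the participant responses are hypothetical and purely illustrative; they are not the C-CAP data. The report does not state which SD or quartile conventions were used, so the sample standard deviation and Python's default quartile method are assumptions.

```python
from statistics import mean, quantiles, stdev


def sus_score(responses):
    """Return the SUS score (0-100) for one participant.

    `responses` holds the ten item ratings in questionnaire order,
    each on the 1-5 agreement scale.
    """
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs exactly ten responses, each between 1 and 5")
    total = 0
    for item, rating in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (rating - 1) if item % 2 == 1 else (5 - rating)
    return total * 2.5


def summarise(scores):
    """Summarise a set of SUS scores as mean, SD and IQR.

    Assumption: sample SD and the default ('exclusive') quartile method.
    """
    q1, _, q3 = quantiles(scores, n=4)
    return {"mean": mean(scores), "sd": stdev(scores), "iqr": q3 - q1}


if __name__ == "__main__":
    # Hypothetical responses from three participants (not study data).
    participants = [
        [4, 2, 4, 1, 5, 2, 4, 2, 4, 2],
        [5, 1, 4, 2, 4, 1, 5, 1, 4, 2],
        [3, 3, 3, 2, 4, 3, 3, 3, 3, 3],
    ]
    scores = [sus_score(p) for p in participants]
    print(scores)
    print(summarise(scores))
```

Reporting the IQR alongside the mean and SD, as the report does, is a sensible choice where a few outlying participants could otherwise distort the picture.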

Participants' perception of the existing curriculum design and approval process was generally quite negative. Responses from the pre-session questionnaire indicated that few were satisfied with the status quo. In particular, participants were inclined to view the current process as onerous, as stifling class/course design, and as in need of improvement to render it more efficient and responsive to the changing demands of industry and the employment market. All of this tended to imply that participants would be receptive to an online system designed to ameliorate these process issues. Yet, as the qualitative data analysis revealed, the demands of the University’s policies and regulations on curriculum approval meant that many participants were unconvinced of the process, irrespective of the system delivering it.

Anecdotal evidence indicated that those participants who had been exposed to the curriculum approval process from a managerial perspective (e.g. as a Head of Department or Vice Dean) were most encouraged by the potential of C-CAP to assist in the approval process; their views were clearly influenced by their professional practice and a holistic understanding of the approval process issues involved. Whilst the other academic users lacked this insight, data from both quantitative and qualitative sources indicated that all participants were dissatisfied with the existing process, tacitly acknowledging that adjustments and improvements were justified. At many stages in their interactions with the C-CAP system participants were not required to produce more information than they otherwise would; yet the demands of the University’s policies and regulations on curriculum approval meant that many participants were sceptical of the overall process, as facilitated by C-CAP. In this respect it could simply be that the forms served by C-CAP, although based on existing curriculum descriptors, were sufficiently different to give the impression that large amounts of additional data were being collected. It could also be surmised that the pressures of increased teaching loads and departmental research expectations have made academics increasingly sceptical of the merits of new IT systems; but, as documented in the report, hostility to improved specificity in curriculum design has links to strongly held views on academic freedom and to attitudes that novel educational concepts are alien to, or have no place in, HE teaching contexts.

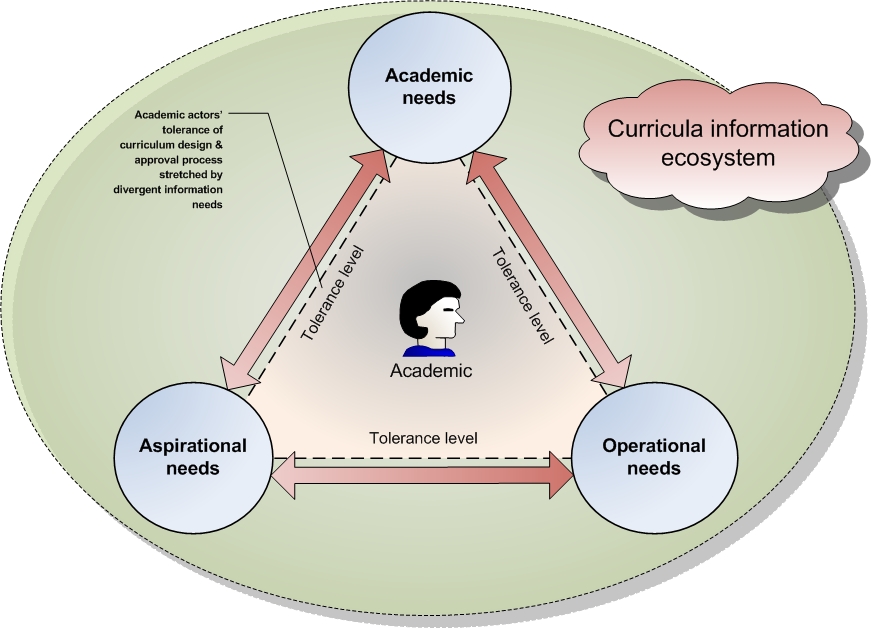

Jim Everett and I have been reflecting on this particular finding in recent weeks. As seductive as the dichotomy described above may appear, we suspect it is a little reductionist and probably unrepresentative of reality. This reductionism is not the result of any data misinterpretation; the data from this phase of the evaluation does expose two opposing perspectives (i.e. system versus process) that warrant further exploration in the next evaluative strand (WP7:38 – Impact & process evaluation). But our experiences, and particularly Jim's, given his lengthy involvement with PiP and its stakeholders, suggest that there are in fact three conflicting "information needs" within the process perspective. These information needs could be described as three divergent sub-perspectives, all existing as part of an information ecosystem and all underpinning the wider process perspective. These divergent information needs have been conceptualised in the "three orbs" model proposed below.

The model is characterised by three divergent information needs, each associated with the curriculum design and approval process and each pulling away from the others. As they pull apart, the tolerance levels of the academic actors situated at the centre of the model become stretched as they attempt to satisfy these disparate needs. Each need represents information that must be supplied during curriculum design in order to facilitate the approval process, and together they form part of a curricula information ecosystem. A successful framework for curriculum design and approval is therefore one that can balance these divergent needs and so deliver a system and process that lie within actors' overall tolerance levels. Failure to achieve equilibrium (i.e. an imbalance in the information ecosystem) may foster the development of ill-conceived curricula and lead to cynicism about the overall process as academics' tolerance levels decline.

The three divergent information needs inhabiting the curricula information ecosystem are as follows:

- The top orb denotes the Academic information need. This orb represents academics' need to design the substantive intellectual content of curricula such that it reflects current discipline-specific trends or requirements, the demands of industry, employers, professional bodies, etc. This would include important aspects of the pedagogy such as aims, learning outcomes, proposed learning activities, and assessments. Such information is obviously important for satisfying the requirements of academic quality committees within University faculties.

- The Operational information need represents the essential operational information required to facilitate the approval and delivery of curricula, e.g. the business case for a new curriculum, how it complements existing curricula and supports the faculty teaching portfolio, its recruitment potential, and its resource requirements (teaching space, technology, staffing, etc.). This information tends to satisfy the need to resource and plan curriculum delivery and, in some cases, is considered separately by faculties.

- Aspirational information needs often appear arcane to academics but represent an important goal for most Universities, many of which now operate in a globalised HE environment. Such aspirational information is normally requested from the centre and assists institutions in effecting improvements in pedagogy, operational efficiency and ultimately the student experience. Within the PiP context at the University of Strathclyde, the centre includes bodies such as the Student Experience and Enhancement Services Directorate (SEES), which seeks to monitor, govern and improve academic quality, learning technology enhancement, educational strategy and so forth. Aspirational information can encompass the extent to which academics will adhere to University policies on assessment and feedback, greater specificity in assessments and their alignment with learning outcomes, detail on how curricula will be evaluated, etc.

The aim for PiP is therefore to foster a system that supports the balancing of these divergent information needs and the process that underpins them. Only then can there be a balance between the system and the process it promotes.

This model should form a useful conceptual framework for guiding future evaluative strands, particularly the faculty piloting of C-CAP (WP7:38, 39). It will guide data collection during the group interviews and will aid subsequent qualitative data analysis. As with all conceptual models, it is anticipated that it will develop and become more sophisticated as more is understood about the curricula information ecosystem. The model may also resonate with others who are familiar with the curriculum design and approval processes within other universities. An obvious question for the astute reader might be: why was greater evidence of the "three orbs" not visible in the data from this phase of evaluation? The simple answer is that the methodology was not designed to elicit data on these issues at such a level of specificity. It was, however, specific enough to indicate that there was a general dichotomy between the system and the process and that this conflicting relationship requires greater understanding. And, with the benefit of this model, understand it we shall.