You might recall that during the PiP evaluation phase, C-CAP was subjected to a period of user acceptance testing, which generated a detailed evaluation "strand report" (Evaluation of systems pilot (WP7:37) - User acceptance testing of Course and Class Approval Online Pilot (C-CAP)). The findings from this strand of the evaluation, like all others, have gone on to inform the development of C-CAP and other aspects of the PiP Project. They have also informed others working in the area of technology-supported curriculum design.

As part of our desire to monitor user acceptance (and to satisfy our curiosity), we decided to launch a system usability and perception questionnaire on C-CAP. For comparative purposes, the questionnaire reused most of the instruments from the user acceptance testing (including Brooke's System Usability Scale (SUS) and Bangor et al.'s Adjective Rating Scale (ARS)) and was open to all C-CAP users for 5-6 weeks. It was also designed to capture additional qualitative data about the user experience and, in essence, to provide some of the qualitative insight we gained in the WP7:38 strand report (WP7:38 Impact and process evaluation - Piloting of C-CAP: Evaluation of impact and implications for system and process development).

The biggest difference to note is how the questionnaire was administered. For WP7:37 it was administered in a controlled lab setting, the preferred context for usability testing; for this latest round of data collection, however, the questionnaire was self-administered and "remote" (i.e. not necessarily tied to a particular user task). Self-administered and remote approaches are generally avoided in usability and system testing because of non-response bias: the users who choose to respond are unlikely to be representative of all users. We anticipated this before launching the questionnaire; it is the inherent hazard of self-administered approaches in user acceptance testing. Data from such instruments are generally only trusted when collected in controlled lab settings, where participants, whether they love or hate a system, are required to respond after completing a specified user task. (In WP7:37 the task was the design and completion of a class design.) We nevertheless pressed ahead, partly because we were curious to see the results and partly because we wanted to capture some additional qualitative feedback.

Looking at the data, the first thing to report is that although C-CAP now enjoys a large and diverse user base (a conservative estimate puts it at around 350 users), only 25 usable questionnaire submissions were made, a response rate of roughly 7%. As Andreasen et al. have noted, such rates are typical of self-administered user acceptance testing, so, like them, we weren't surprised by the poor response rate!

The overall mean SUS score this time was 62.5 (SD = 22.57; IQR = 27.5), which is lower than the score obtained in WP7:37 (SUS = 73.5; SD = 16.12; IQR = 16.25), although the difference is not statistically significant (t(24) = 1.52, p > .05). As you'll notice, however, the latest results show far greater dispersion, most probably a product of non-response bias. It is also noteworthy that the mean SUS score is closer to 70 when outliers are removed from the dataset. The ARS rating of C-CAP in the latest results yielded a mean of 4.32 (SD = 1.43), slightly lower than in WP7:37 (M = 4.7; SD = 1.42). Further analysis reveals that SUS scores predicted ARS ratings, so users' responses appear to be consistent across both instruments. We also collected responses to perception questions about how C-CAP supports curriculum design and approval; interestingly, these were much the same as, or slightly better than, those in WP7:37, with overall agreement that C-CAP supports curriculum design and approval and reduces the administrative load, which continues to be encouraging.
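
For readers unfamiliar with how a single SUS figure such as 62.5 is arrived at, here is a minimal sketch of Brooke's standard scoring rule: each of the ten items is rated 1-5, odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the 0-40 total is multiplied by 2.5. The helpers `sus_score` and `summarise` are hypothetical, written for illustration rather than taken from C-CAP or our own analysis, and quartile conventions differ between statistics packages, so the IQR returned may not match the figures above exactly.

```python
from statistics import mean, quantiles, stdev
from typing import Dict, Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score one completed SUS questionnaire using Brooke's standard rule.

    `responses` holds the ten item ratings in questionnaire order,
    each from 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("SUS has exactly ten items")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each item must be rated from 1 to 5")

    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd-numbered items are positively worded: contribution = rating - 1
            total += rating - 1
        else:
            # Even-numbered items are negatively worded: contribution = 5 - rating
            total += 5 - rating

    # Rescale the 0-40 raw total onto the familiar 0-100 SUS range
    return total * 2.5


def summarise(scores: Sequence[float]) -> Dict[str, float]:
    """Mean, sample SD and IQR of a set of SUS scores.

    Uses the statistics module's default ('exclusive') quartile method;
    other packages may compute quartiles slightly differently.
    """
    q1, _, q3 = quantiles(scores, n=4)
    return {"mean": mean(scores), "sd": stdev(scores), "iqr": q3 - q1}
```

As a sanity check, a respondent who rates every item 3 scores exactly 50.0, and the most favourable possible pattern (5 on every odd item, 1 on every even item) scores 100.0.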

The SUS and ARS scores are a little disappointing and difficult to accept, particularly as numerous system and usability improvements have been made since WP7:37. It is easier to show that a system is unacceptable than to show that it is acceptable, particularly if one wants to be confident in one's conclusions! This must be kept in mind when judging acceptability from SUS scores, unless unusually large numbers of participants are tested in a controlled setting. Of course, an overall SUS score below 50 points to severe user acceptance problems on the horizon, and the data in this instance are nowhere near that usability nadir; but it remains apposite to note that even scores in the 70s and 80s, although promising, do not necessarily guarantee high acceptability in the wild.
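
The point about confidence can be made concrete with the figures reported above. This minimal sketch computes a conventional 95% confidence interval for the mean SUS score from the summary statistics alone (mean 62.5, SD 22.57, n = 25), assuming the scores are roughly normally distributed. The helper `mean_ci` is hypothetical, and the interval reflects sampling error only: it cannot correct for non-response bias.

```python
import math
from typing import Tuple


def mean_ci(mean: float, sd: float, n: int, t_crit: float) -> Tuple[float, float]:
    """Two-sided confidence interval for a mean, from summary statistics.

    half-width = t_crit * sd / sqrt(n), where t_crit is the critical value
    of Student's t distribution with n - 1 degrees of freedom.
    """
    half_width = t_crit * sd / math.sqrt(n)
    return mean - half_width, mean + half_width


# Figures reported for the latest (self-administered) round of data collection.
MEAN_SUS, SD_SUS, N = 62.5, 22.57, 25

# Two-sided 95% critical value of t with 24 df, taken from standard t tables
# (approximately 2.064; scipy.stats.t.ppf(0.975, 24) gives the same value).
T_CRIT_95_DF24 = 2.064

low, high = mean_ci(MEAN_SUS, SD_SUS, N, T_CRIT_95_DF24)
print(f"95% CI for mean SUS: {low:.1f} to {high:.1f}")  # 53.2 to 71.8
```

On these figures the plausible range for the mean runs from the low 50s to just above 70: clear of the below-50 danger zone, but too wide to confirm that C-CAP reaches the 70s with any confidence. Narrowing it would require more respondents, since the half-width shrinks only with the square root of n.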

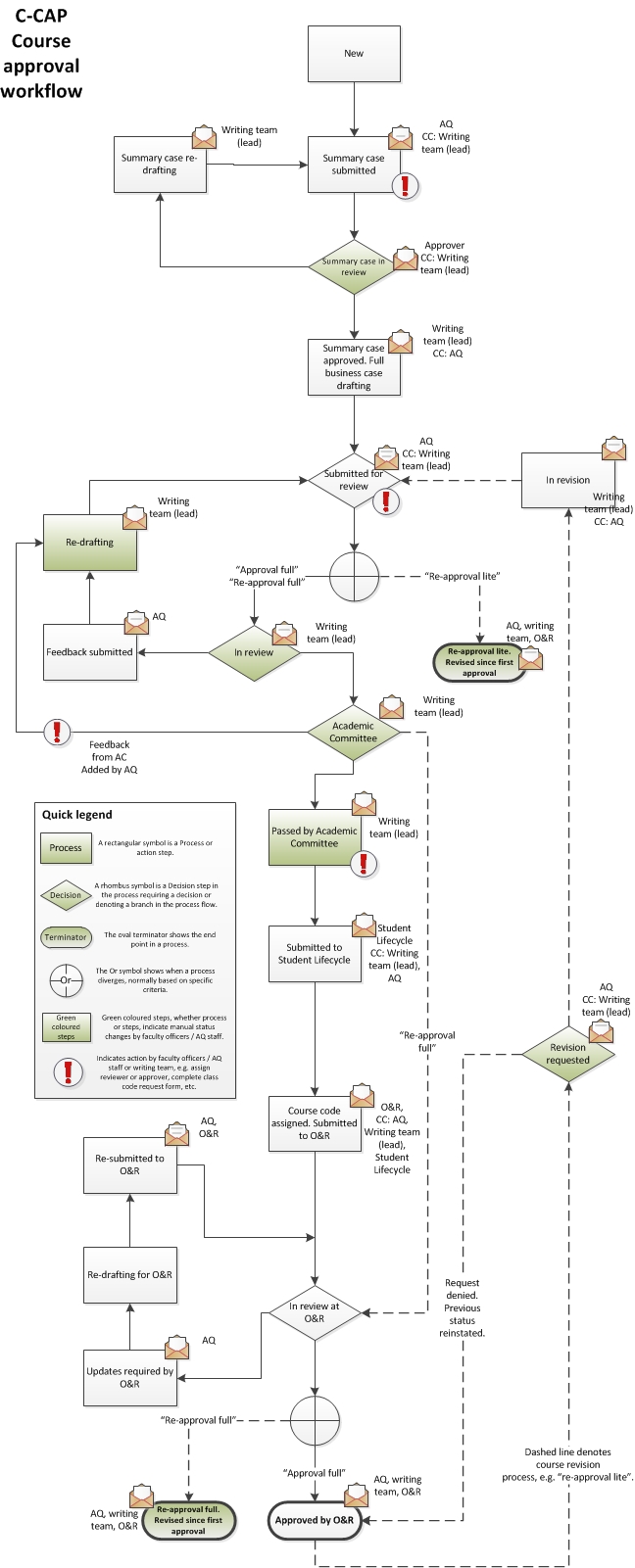

And that's why qualitative data is used to supplement SUS and ARS! Although on this occasion we didn't have the resources for the protocol analysis, stimulated recall or focus groups used in WP7:38, we did include extra sections in the questionnaire to capture some qualitative data. The PiP team already has an ever-expanding list of future system improvements, so it was pleasing to see respondents identifying some of the same issues. These included improving the linkage between classes and courses, so that flicking between, say, a class and the course it forms part of (and vice versa) is quicker and simpler (currently a bit tedious on C-CAP), and improved functionality to enable design "cloning" (i.e. the ability to reuse existing curriculum designs quickly). Incidentally, this latter popular request is something we hope to implement soon, along with our "re-approval lite" functionality. The workflow diagram for this functionality is provided in the image (note: nodes are analogous to visible statuses on the C-CAP system). It's also worth mentioning that for some users C-CAP was "very easy to use"!

The qualitative data does, however, reveal a latent (and perhaps lingering) resistance to the system. The respondents most hostile to the system (based on their SUS and ARS scores) were those who went on to mention explicitly their preference for using MS Word in curriculum design tasks. Of the 7 respondents who expressed a preference for MS Word in their qualitative comments, 5 gave overall SUS scores below 50, suggesting that resistance to alternative design tools was largely motivating their low levels of system acceptance.

Leaving aside the institutional reasons for capturing better, more structured curriculum data and the HE-wide legal demands of the Key Information Sets (KIS), the issues surrounding single-user applications (like MS Word) in business and organisational contexts were discussed in a previous Blog post about system resistance and MS Word, which itself emerged from earlier evaluation activity (specifically WP7:37 and WP7:38). Here is a short snippet from that post on the general issues surrounding single-user applications and the motivations behind system resistance:

> The issues surrounding collaborative working with single-user applications (such as MS Word) have been extensively reviewed in the literature, particularly by researchers focusing on their use within business and organisational contexts. For example, some researchers summarise the problems intrinsic to collaborative working with single-user applications, highlighting a lack of collaborative transparency (e.g. understanding the activities of others so as to avoid duplicating or neglecting work) and version control as particular issues. Others note the inefficient and "error prone" use of MS Word and email in the collaborative design of ethics protocols. Whilst better integration of single-user applications is now offered by document management and sharing platforms (e.g. MS SharePoint), the information and data contained within any uploaded documents often lack structure and therefore evade most types of extraction or computation. C-CAP has been expressly designed to resolve the information management issues that commonly result from unstructured information, poor version control and inadequate collaborative working mechanisms.

C-CAP was created to eradicate the issues surrounding single-user applications, and it has certainly been successful in this respect (see the Final project evaluation synthesis (WP7:40), for example). The C-CAP embedding activity has also focussed on addressing some users' attachment to MS Word and, as we have reported previously, pre-emptive measures were taken and continue to be taken. Are these users then simply "laggards", in technology diffusion parlance, destined to accept the system eventually (see image), or are more innovative user advocacy methods required? Answers on a postcard!